Cannot be tested on an emulated robot, requires a real robot.

Goal

In this tutorial, we will make Pepper find the humans around it and retrieve their characteristics.

These characteristics include, for example, spatial position, facial expressions, age and gender.

Prerequisites

Before starting this tutorial, you should be familiar with the notions of robot focus and robot lifecycle.

For further details, see: Mastering Focus & Robot lifecycle.

Let’s start a new project

For further details, see: Creating a robot application.

Store the HumanAwareness service and the QiContext in the MainActivity:

// Store the HumanAwareness service.

private var humanAwareness: HumanAwareness? = null

// The QiContext provided by the QiSDK.

private var qiContext: QiContext? = null

// Store the HumanAwareness service.

private HumanAwareness humanAwareness;

// The QiContext provided by the QiSDK.

private QiContext qiContext;

And put the following code in the onRobotFocusGained method:

// Store the provided QiContext.

this.qiContext = qiContext

// Get the HumanAwareness service from the QiContext.

humanAwareness = qiContext.humanAwareness

// Store the provided QiContext.

this.qiContext = qiContext;

// Get the HumanAwareness service from the QiContext.

humanAwareness = qiContext.getHumanAwareness();

Put the following code in the onRobotFocusLost method:

// Remove the QiContext.

this.qiContext = null

// Remove the QiContext.

this.qiContext = null;

Add the findHumansAround method to your MainActivity class:

private fun findHumansAround() {

// Here we will find the humans around the robot.

}

private void findHumansAround() {

// Here we will find the humans around the robot.

}

And call it in the onRobotFocusGained method:

findHumansAround()

findHumansAround();

The HumanAwareness interface allows us to get the humans around the robot with the getHumansAround method.

Add the following code in the findHumansAround method:

// Get the humans around the robot.

val humansAroundFuture: Future<List<Human>>? = humanAwareness?.async()?.humansAround

// Get the humans around the robot.

Future<List<Human>> humansAroundFuture = humanAwareness.async().getHumansAround();

And add a lambda to get the humans:

humansAroundFuture?.andThenConsume { humansAround -> Log.i(TAG, "${humansAround.size} human(s) around.") }

humansAroundFuture.andThenConsume(humansAround -> Log.i(TAG, humansAround.size() + " human(s) around."));
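
The QiSDK Future cannot run outside a robot application, so the following sketch uses the JDK's `CompletableFuture` as a stand-in to illustrate the same pattern: start an asynchronous request, then attach a callback that consumes the result. The class `AsyncHumansSketch`, the method `describe`, and the `String` names used in place of `Human` objects are assumptions made for illustration; `thenAccept` plays roughly the role that `andThenConsume` plays in the tutorial code.

```java
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.CompletableFuture;

// Hypothetical stand-in: illustrates the "future + consumer callback" pattern
// with the JDK's CompletableFuture instead of the QiSDK Future.
class AsyncHumansSketch {

    // Builds the same message as the tutorial's log line.
    static String describe(List<String> humansAround) {
        return humansAround.size() + " human(s) around.";
    }

    public static void main(String[] args) {
        // Simulate an asynchronous request that eventually yields a list.
        CompletableFuture<List<String>> humansAroundFuture =
                CompletableFuture.supplyAsync(() -> Arrays.asList("human A", "human B"));

        // Attach a consumer that runs once the list is available.
        CompletableFuture<Void> done =
                humansAroundFuture.thenAccept(humans -> System.out.println(describe(humans)));

        // Wait for the callback so the program does not exit early.
        done.join();
    }
}
```

As in the tutorial, the callback only runs if the request succeeds; error handling is left out of this sketch.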

We are now able to find humans. Next, you will learn how to display their characteristics.

Create the retrieveCharacteristics method:

private fun retrieveCharacteristics(humans: List<Human>) {

// Here we will retrieve the people characteristics.

}

private void retrieveCharacteristics(final List<Human> humans) {

// Here we will retrieve the people characteristics.

}

Call this method inside the lambda in the findHumansAround method:

humansAroundFuture?.andThenConsume { humansAround ->

Log.i(TAG, "${humansAround.size} human(s) around.")

retrieveCharacteristics(humansAround)

}

humansAroundFuture.andThenConsume(humansAround -> {

Log.i(TAG, humansAround.size() + " human(s) around.");

retrieveCharacteristics(humansAround);

});

Add the following code to the retrieveCharacteristics method:

humans.forEachIndexed { index, human ->

// Get the characteristics.

val age: Int = human.estimatedAge.years

val gender: Gender = human.estimatedGender

val pleasureState: PleasureState = human.emotion.pleasure

val excitementState: ExcitementState = human.emotion.excitement

val engagementIntentionState: EngagementIntentionState = human.engagementIntention

val smileState: SmileState = human.facialExpressions.smile

val attentionState: AttentionState = human.attention

// Display the characteristics.

Log.i(TAG, "----- Human $index -----")

Log.i(TAG, "Age: $age year(s)")

Log.i(TAG, "Gender: $gender")

Log.i(TAG, "Pleasure state: $pleasureState")

Log.i(TAG, "Excitement state: $excitementState")

Log.i(TAG, "Engagement state: $engagementIntentionState")

Log.i(TAG, "Smile state: $smileState")

Log.i(TAG, "Attention state: $attentionState")

}

for (int i = 0; i < humans.size(); i++) {

// Get the human.

Human human = humans.get(i);

// Get the characteristics.

Integer age = human.getEstimatedAge().getYears();

Gender gender = human.getEstimatedGender();

PleasureState pleasureState = human.getEmotion().getPleasure();

ExcitementState excitementState = human.getEmotion().getExcitement();

EngagementIntentionState engagementIntentionState = human.getEngagementIntention();

SmileState smileState = human.getFacialExpressions().getSmile();

AttentionState attentionState = human.getAttention();

// Display the characteristics.

Log.i(TAG, "----- Human " + i + " -----");

Log.i(TAG, "Age: " + age + " year(s)");

Log.i(TAG, "Gender: " + gender);

Log.i(TAG, "Pleasure state: " + pleasureState);

Log.i(TAG, "Excitement state: " + excitementState);

Log.i(TAG, "Engagement state: " + engagementIntentionState);

Log.i(TAG, "Smile state: " + smileState);

Log.i(TAG, "Attention state: " + attentionState);

}

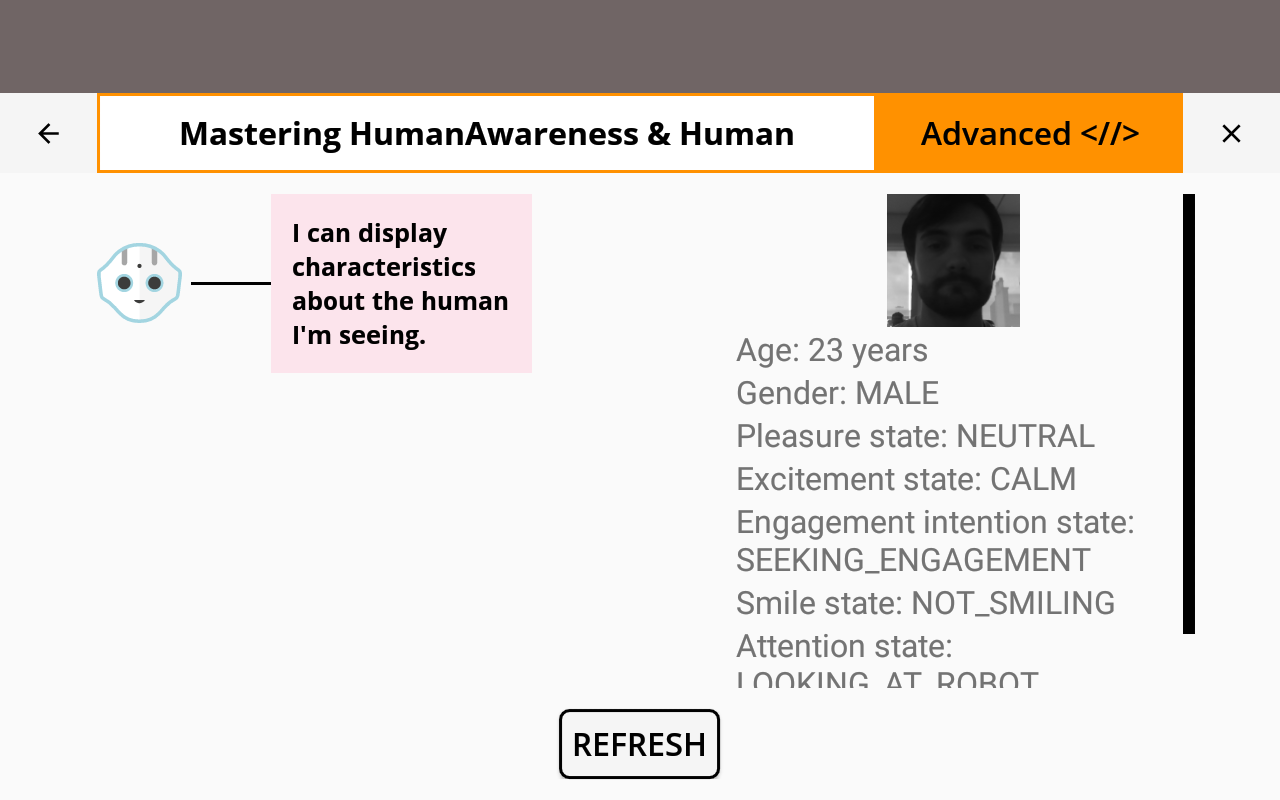

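Here we display the following characteristics: estimated age, estimated gender, pleasure state, excitement state, engagement intention state, smile state and attention state.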

Let’s test our code using a button to refresh the characteristics.

In the activity_main.xml layout file, add the following Button:

<Button

android:id="@+id/refresh_button"

android:layout_width="wrap_content"

android:layout_height="wrap_content"

android:text="Refresh"/>

Find the button in the view in the onCreate method and add a listener on it:

// Find humans around when refresh button clicked.

refresh_button.setOnClickListener {

if (qiContext != null) {

findHumansAround()

}

}

// Find humans around when refresh button clicked.

Button refreshButton = (Button) findViewById(R.id.refresh_button);

refreshButton.setOnClickListener(v -> {

if (qiContext != null) {

findHumansAround();

}

});
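
The null check in the listener matters because the button can be clicked while the application does not hold the robot focus. The following plain-Java sketch simulates that lifecycle without Android or the QiSDK. `FocusGuardSketch`, its `context` field (an `Object` standing in for the QiContext) and its `refreshCount` counter are hypothetical stand-ins; only the method names mirror the tutorial.

```java
// Hypothetical simulation of the focus lifecycle and the guarded refresh.
class FocusGuardSketch {

    // Stands in for the stored QiContext; null while the focus is not held.
    private Object context;
    private int refreshCount = 0;

    void onRobotFocusGained(Object providedContext) {
        this.context = providedContext;
    }

    void onRobotFocusLost() {
        this.context = null;
    }

    // Mirrors the click listener: refresh only when a context is available.
    boolean onRefreshClicked() {
        if (context != null) {
            refreshCount++;
            return true;
        }
        return false;
    }

    int getRefreshCount() {
        return refreshCount;
    }

    public static void main(String[] args) {
        FocusGuardSketch activity = new FocusGuardSketch();
        System.out.println(activity.onRefreshClicked()); // Focus not yet gained.
        activity.onRobotFocusGained(new Object());
        System.out.println(activity.onRefreshClicked()); // Focus held.
        activity.onRobotFocusLost();
        System.out.println(activity.onRefreshClicked()); // Focus lost again.
    }
}
```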

The sources for this tutorial are available on GitHub.

| Step | Action |
|---|---|
| 1 | Install and run the application. For further details, see: Running an application. |
| 2 | Choose “Detect humans”. Stay in front of Pepper. Try to smile or frown to test the emotion recognition capabilities, and look at the robot or somewhere else to test the attention functions. Test excitement by speaking loudly and waving your hands vigorously in front of the robot. Note that the age and gender estimates only stabilize after 5 seconds. |
| 3 | Click the refresh button to update the humans list and their characteristics. |

You are now able to retrieve people characteristics.

In this section, we will display the distance between the humans and the robot.

We will need the robot frame and the human frame.

To retrieve the robot frame, we use the Actuation interface.

The robot frame is available via the robotFrame method:

// Get the Actuation service from the QiContext.

val actuation: Actuation = qiContext?.actuation ?: return

// Get the robot frame.

val robotFrame: Frame = actuation.robotFrame()

humans.forEachIndexed { index, human ->

// ... (Display the human characteristics)

}

// Get the Actuation service from the QiContext.

Actuation actuation = qiContext.getActuation();

// Get the robot frame.

Frame robotFrame = actuation.robotFrame();

for (int i = 0; i < humans.size(); i++) {

// ... (Display the human characteristics)

}

One of the people characteristics is the head position.

We can retrieve it with the getHeadFrame method:

humans.forEachIndexed { index, human ->

// ... (Get the characteristics)

val humanFrame: Frame = human.headFrame

// ... (Display the characteristics)

}

// ... (Get the robot frame)

for (int i = 0; i < humans.size(); i++) {

// Get the human.

Human human = humans.get(i);

// ... (Get the characteristics)

Frame humanFrame = human.getHeadFrame();

// ... (Display the characteristics)

}

We now have access to both the human frame and the robot frame. With these Frames, we will be able to compute the distance between the human and the robot.

Create the computeDistance method:

private fun computeDistance(humanFrame: Frame, robotFrame: Frame): Double {

// Here we will compute the distance between the human and the robot.

return 0.0

}

private double computeDistance(Frame humanFrame, Frame robotFrame) {

// Here we will compute the distance between the human and the robot.

return 0;

}

Call this method in the for loop where we got the human frame:

// Compute the distance.

val distance: Double = computeDistance(humanFrame, robotFrame)

// Display the distance between the human and the robot.

Log.i(TAG, "Distance: $distance meter(s).")

// Compute the distance.

double distance = computeDistance(humanFrame, robotFrame);

// Display the distance between the human and the robot.

Log.i(TAG, "Distance: " + distance + " meter(s).");

We can determine the transform between two frames by using the computeTransform method.

In the computeDistance method, add the following code:

// Get the TransformTime between the human frame and the robot frame.

val transformTime: TransformTime = humanFrame.computeTransform(robotFrame)

// Get the TransformTime between the human frame and the robot frame.

TransformTime transformTime = humanFrame.computeTransform(robotFrame);

Here we get a TransformTime, which is composed of a Transform component and a time component.

The Transform represents the transform between the robot position and the human position.

The time component corresponds to the last time the human location was updated.

For our example, we only need the transform part:

// Get the transform.

val transform: Transform = transformTime.transform

// Get the transform.

Transform transform = transformTime.getTransform();

Then we extract the translation part:

// Get the translation.

val translation: Vector3 = transform.translation

// Get the translation.

Vector3 translation = transform.getTranslation();

We get the x and y components of the translation.

// Get the x and y components of the translation.

val x: Double = translation.x

val y: Double = translation.y

// Get the x and y components of the translation.

double x = translation.getX();

double y = translation.getY();

Then we return the corresponding distance.

// Compute the distance and return it (requires: import kotlin.math.sqrt).

return sqrt(x * x + y * y)

// Compute the distance and return it.

return Math.sqrt(x * x + y * y);
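
Putting the last steps together, the distance is the Euclidean norm of the translation's x and y components. The sketch below is self-contained and does not use the QiSDK: `PlanarDistanceSketch` and `planarDistance` are hypothetical names, and the plain `double` parameters stand in for `translation.getX()` and `translation.getY()`. It uses `Math.hypot`, which computes the same quantity as `Math.sqrt(x * x + y * y)`.

```java
// Hypothetical stand-in for the computeDistance logic, without QiSDK types.
class PlanarDistanceSketch {

    // x and y stand in for translation.getX() and translation.getY(), in meters.
    static double planarDistance(double x, double y) {
        // Math.hypot computes sqrt(x^2 + y^2) while avoiding intermediate overflow.
        return Math.hypot(x, y);
    }

    public static void main(String[] args) {
        // Example: a translation of 1.2 m along x and 0.5 m along y.
        System.out.println("Distance: " + planarDistance(1.2, 0.5) + " meter(s).");
    }
}
```

The z component of the translation is left out, as in the tutorial code, so this is a distance in the x-y plane rather than a full 3D distance.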

In this section, we will show the face pictures of the humans around.

Add the following code to the retrieveCharacteristics method when getting characteristics for each human:

// Get face picture.

val facePictureBuffer: ByteBuffer = human.facePicture.image.data

facePictureBuffer.rewind()

val pictureBufferSize: Int = facePictureBuffer.remaining()

val facePictureArray: ByteArray = ByteArray(pictureBufferSize)

facePictureBuffer.get(facePictureArray)

// Get face picture.

ByteBuffer facePictureBuffer = human.getFacePicture().getImage().getData();

facePictureBuffer.rewind();

int pictureBufferSize = facePictureBuffer.remaining();

byte[] facePictureArray = new byte[pictureBufferSize];

facePictureBuffer.get(facePictureArray);

Then decode the byte array to bitmap.

Note that the byte array will be empty until the human face picture passes filtering conditions at least once.

var facePicture: Bitmap? = null

// Check whether a picture is available (the buffer is empty otherwise).

if (pictureBufferSize != 0) {

Log.i(TAG, "Picture available")

facePicture = BitmapFactory.decodeByteArray(facePictureArray, 0, pictureBufferSize)

} else {

Log.i(TAG, "Picture not available")

}

Bitmap facePicture = null;

// Check whether a picture is available (the buffer is empty otherwise).

if (pictureBufferSize != 0) {

Log.i(TAG, "Picture available");

facePicture = BitmapFactory.decodeByteArray(facePictureArray, 0, pictureBufferSize);

} else {

Log.i(TAG, "Picture not available");

}
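
The buffer handling above can be tried without a robot. The following sketch assumes nothing about the QiSDK beyond the fact that the picture data arrives as a `ByteBuffer`: it copies a buffer into a byte array and treats an empty result as "picture not available". `FacePictureBufferSketch` and `toByteArray` are hypothetical names, and the Android `BitmapFactory` decoding step is deliberately left out.

```java
import java.nio.ByteBuffer;

// Hypothetical helper: shows the rewind / remaining / get sequence in isolation.
class FacePictureBufferSketch {

    // Copies the whole content of the buffer into a new byte array.
    static byte[] toByteArray(ByteBuffer buffer) {
        // rewind() resets the position to 0 so that remaining() covers all the data.
        buffer.rewind();
        byte[] array = new byte[buffer.remaining()];
        buffer.get(array);
        return array;
    }

    public static void main(String[] args) {
        ByteBuffer picture = ByteBuffer.wrap(new byte[] {1, 2, 3, 4});
        picture.get(); // Simulate a previous partial read.
        ByteBuffer empty = ByteBuffer.allocate(0);

        System.out.println(toByteArray(picture).length != 0 ? "Picture available" : "Picture not available");
        System.out.println(toByteArray(empty).length != 0 ? "Picture available" : "Picture not available");
    }
}
```

Without the call to `rewind()`, a buffer that has already been partially read would yield only the bytes after its current position.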

| Step | Action |
|---|---|
| 1 | Run the application and stay in front of Pepper. |
| 2 | You should see the log traces “1 human(s) around.” and then your characteristics, including the distance between you and the robot and your face picture. |
| 3 | You can click on the refresh button to update the humans list and their characteristics. |